A misconception among many developers is believing that a concurrent solution is always faster than a sequential one. This couldn’t be more wrong. The overall performance of a solution depends on many factors, such as the efficiency of our code structure (concurrency), which parts can be tackled in parallel and the level of contention among the computation units.

This post reminds us about some fundamental knowledge of concurrency in Golang; then, we will see a concrete example where a concurrent solution isn’t necessarily faster.

How Golang Scheduling works

A thread is the smallest unit of processing that an OS can perform. If a process wants to execute multiple actions simultaneously, it spins up multiple threads. These threads can be:

- Concurrent — Two or more threads can start, run, and complete in overlapping periods.

- Parallel — The same task can be executed multiple times at once.

The OS is responsible for scheduling the thread’s processes optimally so that:

- All the threads can consume CPU cycles without being starved for too much time.

- The workload is distributed as evenly as possible among the different CPU cores.

NOTE: The word thread can also have a different meaning at a CPU level. Each physical core can be composed of multiple logical cores (the concept of hyper-threading), and a logical core is also called a thread. In this post, when we use the word thread, we mean the unit of processing, not a logical core.

A CPU core executes different threads. When it switches from one thread to another, it executes an operation called context switching. The active thread-consuming CPU cycles was in an executing state and moves to a runnable state, meaning it’s ready to be executed pending an available core. Context switching is considered an expensive operation because the OS needs to save the current execution state of a thread before the switch (such as the current register values).

As Go developers, we can’t create threads directly, but we can create goroutines, which can be considered application-level threads. However, whereas an OS thread is context-switched on and off a CPU core by the OS, a goroutine is context-switched on and off an OS thread by the Go runtime. Also, compared to an OS thread, a goroutine has a smaller memory footprint: 2 KB for goroutines from Go 1.4. An OS thread depends on the OS, but, for example, on Linux/x86–32, the default size is 2 MB (see https://man7.org/linux/man-pages/man3/pthread_create.3.html). Having a smaller size makes context switching faster.

NOTE: Context switching a goroutine versus a thread is about 80% to 90% faster, depending on the architecture.

Let’s now discuss

How the Golang scheduler works

to overview how goroutines are handled. Internally, the Go scheduler uses the following terminology (see proc.go):

- G — Goroutine

- M — OS thread (stands for machine)

- P — CPU core (stands for processor)

The OS scheduler assigns each OS thread (M) to a CPU core (P). Then, each goroutine (G) runs on an M. The GOMAXPROCS variable defines the limit of Ms in charge of executing user-level code simultaneously. But if a thread is blocked in a system call (for example, I/O), the scheduler can spin up more Ms. As of Go 1.5, GOMAXPROCS is by default equal to the number of available CPU cores.

A goroutine has a simpler lifecycle than an OS thread. It can be doing one of the following:

- Executing — The goroutine is scheduled on an M and executing its instructions.

- Runnable — The goroutine is waiting to be in an executing state.

- Waiting — The goroutine is stopped and pending something completing, such as a system call or a synchronization operation (such as acquiring a mutex).

There’s one last stage to understand about the implementation of Go scheduling: when a goroutine is created but cannot be executed yet; for example, all the other Ms are already executing a G. In this scenario, what will the Go runtime do about it? The answer is queuing. The Go runtime handles two kinds of queues: one local queue per P and a global queue shared among all the Ps.

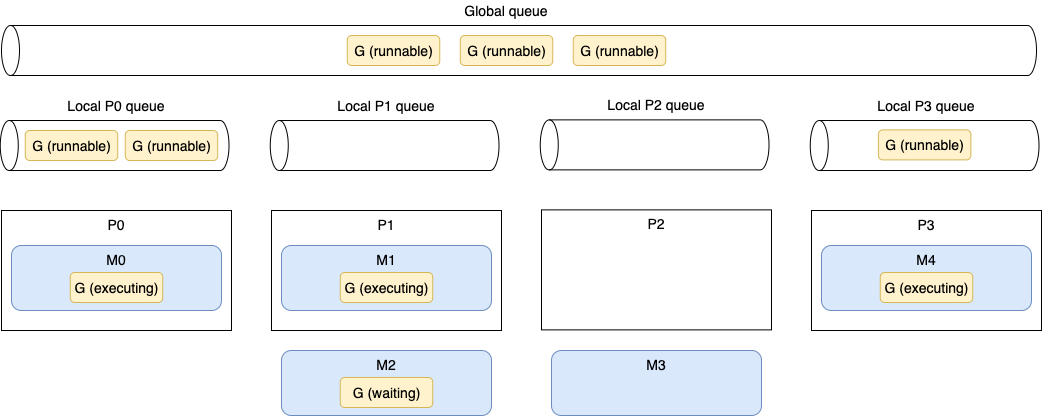

Figure 1 shows a given scheduling situation on a four-core machine with GOMAXPROCS equal to 4. The parts are the logical cores (Ps), goroutines (Gs), OS threads (Ms), local queues, and global queue:

First, we can see five Ms, whereas GOMAXPROCS is set to 4. But as we mentioned, the Golang runtime can create more OS threads than the GOMAXPROCS value if needed.

P0, P1, and P3 are currently busy executing Golang runtime threads. But P2 is presently idle as M3 is switched off P2, and there’s no goroutine to be executed. This isn’t a good situation because six runnable goroutines are pending being executed, some in the global queue and some in other local queues. How will the Go runtime handle this situation? Here’s the scheduling implementation in pseudocode